Rising cybercrime, virtualization, regulatory obligations, and a severe shortage of cyber and network security personnel are straining organizations. Encryption, IT complexity, surface scraping, and siloed information hinder security and network visibility.

Encryption has become the new normal, driven by privacy and security concerns. Enterprises are finding it increasingly difficult to tell which traffic is malicious and which isn’t. The rapid adoption of encryption has created a significant security visibility challenge worldwide, and threat actors now exploit the lack of decryption to avoid detection.

Encrypted payloads cannot be inspected, making network risks harder or even impossible to see. More than 95% of internet traffic is now encrypted, which defeats Deep Packet Inspection (DPI) and other tools that rely on decrypted packets to inspect traffic and identify risks.

DPI and other techniques that decode packets to detect threats have traditionally been expensive to deploy and maintain, and they are now becoming obsolete.

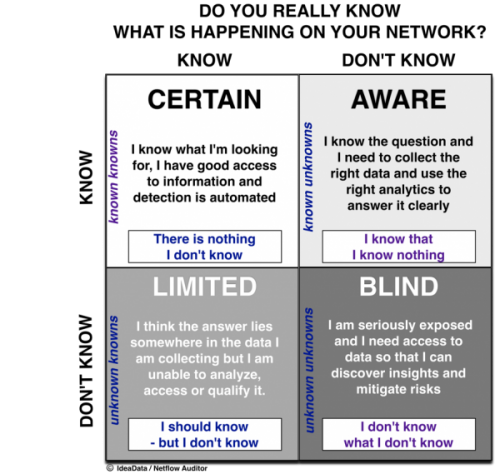

As the threat surface grows, organizations have less intelligence with which to identify and manage threats. Some 99% of other network and cyber technologies preserve only 1% of network data, causing severe network blind spots that lead security and networking professionals to overlook real dangers.

CySight provides the most precise cyber detection and forensics for on-premises and cloud networks. CySight has 20x more visibility than all of its competitors combined, substantially improving security, application visibility, Zero Trust, and billing. It provides a completely integrated, agentless, and scalable Network, Endpoint, Extended, Availability, Compliance, and Forensics solution, all without packet decryption.

CySight uses flow data exported by most networking equipment. It compares traffic against global threat characteristics to detect infected hosts, ransomware, DDoS, and other suspicious traffic. CySight’s integrated solution provides network, cloud, IoT, and endpoint security and visibility, detecting and mitigating hazards without packet decryption.
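To make the idea of comparing flow records against known threat characteristics concrete, here is a minimal Python sketch. It is not CySight's implementation. The `FlowRecord` fields, the `THREAT_NETWORKS` list, and the function names are all hypothetical and chosen only for illustration, and the addresses come from reserved documentation ranges. It shows that flow metadata alone (who talked to whom, on which port, how much) can flag a host without any payload being decrypted.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class FlowRecord:
    """Hypothetical, simplified flow record: metadata only, no payload."""
    src_ip: str
    dst_ip: str
    dst_port: int
    bytes_sent: int


# Assumed threat feed: networks flagged as malicious (documentation ranges).
THREAT_NETWORKS = [ip_network("203.0.113.0/24"), ip_network("198.51.100.7/32")]


def is_flagged(address: str) -> bool:
    """Return True if the address falls inside any flagged network."""
    addr = ip_address(address)
    return any(addr in net for net in THREAT_NETWORKS)


def suspicious_hosts(flows):
    """Group flows by source host when the destination is flagged."""
    hits = {}
    for flow in flows:
        if is_flagged(flow.dst_ip):
            hits.setdefault(flow.src_ip, []).append(flow)
    return hits


flows = [
    FlowRecord("10.0.0.5", "203.0.113.44", 443, 1200),
    FlowRecord("10.0.0.6", "192.0.2.10", 443, 800),
    FlowRecord("10.0.0.5", "198.51.100.7", 8443, 64),
]

flagged = suspicious_hosts(flows)
for host, matched in flagged.items():
    print(host, len(matched))
```

In this toy data set only `10.0.0.5` contacts flagged destinations, so it is the only host reported. A production system would draw on far richer telemetry and continuously updated threat intelligence. The sketch only illustrates the principle.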

Using readily available data sources, CySight records flows at unparalleled depth in a compact footprint, correlating context and applying Machine Learning, Predictive AI, and Zero Trust micro-segmentation. CySight identifies and addresses risks, triaging security behaviors and endpoint threats with multi-focal telemetry and contextual information to provide full risk detection and mitigation that other solutions cannot.